
Actualkey Preparation: the latest DP-600 (Implementing Analytics Solutions Using Microsoft Fabric) exam questions and answers in PDF format, with answers verified by experts. Pass your exam for sure, with instant downloads and a "Money Back Guarantee".

| Item | Details |
| --- | --- |
| Vendor | Microsoft |
| Certification | Microsoft Fabric Analytics Engineer Associate |
| Exam Code | DP-600 |
| Title | Implementing Analytics Solutions Using Microsoft Fabric Exam |
| No. of Questions | 117 |
| Last Updated | February 21, 2025 |
| Product Type | Q & A with Explanation |
| Bundle Pack Included | PDF + Offline / Android Testing Engine and Simulator |

About the exam

Languages

Some exams are localized into other languages. You can find these in the Schedule Exam section of the Exam Details webpage. If the exam isn’t available in your preferred language, you can request an additional 30 minutes to complete the exam.

Note

The bullets that follow each of the skills measured are intended to illustrate how we are assessing that skill. Related topics may be covered in the exam.

Note

Most questions cover features that are generally available (GA). The exam may contain questions on Preview features if those features are commonly used.

Skills measured

Audience profile

As a candidate for this exam, you should have subject matter expertise in designing, creating, and deploying enterprise-scale data analytics solutions.

Your responsibilities for this role include transforming data into reusable analytics assets by using Microsoft Fabric components, such as:

Lakehouses

Data warehouses

Notebooks

Dataflows

Data pipelines

Semantic models

Reports

You implement analytics best practices in Fabric, including version control and deployment.

To implement solutions as a Fabric analytics engineer, you partner with other roles, such as:

Solution architects

Data engineers

Data scientists

AI engineers

Database administrators

Power BI data analysts

In addition to in-depth work with the Fabric platform, you need experience with:

Data modeling

Data transformation

Git-based source control

Exploratory analytics

Languages, including Structured Query Language (SQL), Data Analysis Expressions (DAX), and PySpark

Skills at a glance

Plan, implement, and manage a solution for data analytics (10–15%)

Prepare and serve data (40–45%)

Implement and manage semantic models (20–25%)

Explore and analyze data (20–25%)

Plan, implement, and manage a solution for data analytics (10–15%)

Plan a data analytics environment

Identify requirements for a solution, including components, features, performance, and capacity stock-keeping units (SKUs)

Recommend settings in the Fabric admin portal

Choose a data gateway type

Create a custom Power BI report theme

Implement and manage a data analytics environment

Implement workspace and item-level access controls for Fabric items

Implement data sharing for workspaces, warehouses, and lakehouses

Manage sensitivity labels in semantic models and lakehouses

Configure Fabric-enabled workspace settings

Manage Fabric capacity

Manage the analytics development lifecycle

Implement version control for a workspace

Create and manage a Power BI Desktop project (.pbip)

Plan and implement deployment solutions

Perform impact analysis of downstream dependencies from lakehouses, data warehouses, dataflows, and semantic models

Deploy and manage semantic models by using the XMLA endpoint

Create and update reusable assets, including Power BI template (.pbit) files, Power BI data source (.pbids) files, and shared semantic models

Prepare and serve data (40–45%)

Create objects in a lakehouse or warehouse

Ingest data by using a data pipeline, dataflow, or notebook

Create and manage shortcuts

Implement file partitioning for analytics workloads in a lakehouse

Create views, functions, and stored procedures

Enrich data by adding new columns or tables

Copy data

Choose an appropriate method for copying data from a Fabric data source to a lakehouse or warehouse

Copy data by using a data pipeline, dataflow, or notebook

Add stored procedures, notebooks, and dataflows to a data pipeline

Schedule data pipelines

Schedule dataflows and notebooks

Transform data

Implement a data cleansing process

Implement a star schema for a lakehouse or warehouse, including Type 1 and Type 2 slowly changing dimensions

Implement bridge tables for a lakehouse or a warehouse

Denormalize data

Aggregate or de-aggregate data

Merge or join data

Identify and resolve duplicate data, missing data, or null values

Convert data types by using SQL or PySpark

Filter data
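The difference between Type 1 and Type 2 slowly changing dimensions, listed above, can be sketched in a few lines. This is a minimal plain-Python illustration, not Fabric, SQL, or PySpark code; the `CustomerRow` shape, the `valid_from` / `valid_to` / `is_current` columns, and both helper functions are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class CustomerRow:
    """One version of a customer in a hypothetical dimension table."""
    customer_id: int          # business key
    city: str                 # attribute whose changes we care about
    valid_from: date
    valid_to: Optional[date]  # None means the version is still open
    is_current: bool


def apply_type1(rows, customer_id, new_city):
    """Type 1: overwrite the attribute in place, keeping no history."""
    return [
        replace(r, city=new_city) if r.customer_id == customer_id else r
        for r in rows
    ]


def apply_type2(rows, customer_id, new_city, change_date):
    """Type 2: close the current version and append a new current version."""
    result = []
    changed = False
    for r in rows:
        if r.customer_id == customer_id and r.is_current and r.city != new_city:
            # Expire the old version instead of overwriting it.
            result.append(replace(r, valid_to=change_date, is_current=False))
            changed = True
        else:
            result.append(r)
    if changed:
        result.append(CustomerRow(customer_id, new_city, change_date, None, True))
    return result


dim = [CustomerRow(1, "Oslo", date(2024, 1, 1), None, True)]
type1 = apply_type1(dim, 1, "Bergen")                    # one row, history lost
type2 = apply_type2(dim, 1, "Bergen", date(2025, 2, 1))  # two rows, history kept
```

In a lakehouse or warehouse the same logic would typically be expressed as an update-plus-insert (or a merge) against the dimension table; the sketch only shows which rows change and which are added.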

Optimize performance

Identify and resolve data loading performance bottlenecks in dataflows, notebooks, and SQL queries

Implement performance improvements in dataflows, notebooks, and SQL queries

Identify and resolve issues with Delta table file sizes

Implement and manage semantic models (20–25%)

Design and build semantic models

Choose a storage mode, including Direct Lake

Identify use cases for DAX Studio and Tabular Editor 2

Implement a star schema for a semantic model

Implement relationships, such as bridge tables and many-to-many relationships

Write calculations that use DAX variables and functions, such as iterators, table filtering, windowing, and information functions

Implement calculation groups, dynamic strings, and field parameters

Design and build a large format dataset

Design and build composite models that include aggregations

Implement dynamic row-level security and object-level security

Validate row-level security and object-level security

Optimize enterprise-scale semantic models

Implement performance improvements in queries and report visuals

Improve DAX performance by using DAX Studio

Optimize a semantic model by using Tabular Editor 2

Implement incremental refresh

Explore and analyze data (20–25%)

Perform exploratory analytics

Implement descriptive and diagnostic analytics

Integrate prescriptive and predictive analytics into a visual or report

Profile data

Query data by using SQL

Query a lakehouse in Fabric by using SQL queries or the visual query editor

Query a warehouse in Fabric by using SQL queries or the visual query editor

Connect to and query datasets by using the XMLA endpoint

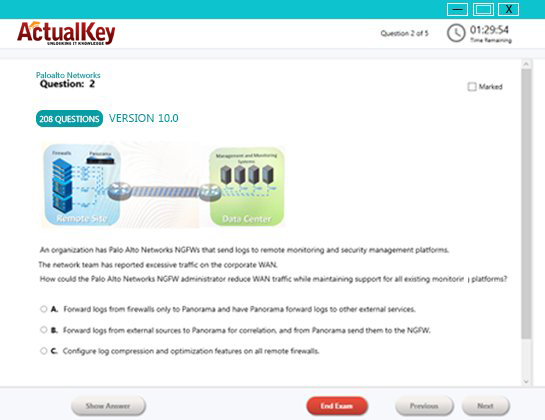

Sample Questions and Answers

QUESTION 1

What should you recommend using to ingest the customer data into the data store in the AnalyticsPOC workspace?

A. a stored procedure

B. a pipeline that contains a KQL activity

C. a Spark notebook

D. a dataflow

Answer: D

QUESTION 2

Which type of data store should you recommend in the AnalyticsPOC workspace?

A. a data lake

B. a warehouse

C. a lakehouse

D. an external Hive metastore

Answer: C

QUESTION 3

You are the administrator of a Fabric workspace that contains a lakehouse named Lakehouse1.

Lakehouse1 contains the following tables:

Table1: A Delta table created by using a shortcut

Table2: An external table created by using Spark

Table3: A managed table

You plan to connect to Lakehouse1 by using its SQL endpoint. What will you be able to do after connecting to Lakehouse1?

A. Read Table3.

B. Update the data in Table3.

C. Read Table2.

D. Update the data in Table1.

Answer: A

QUESTION 5

You have a Fabric tenant that contains a warehouse.

You use a dataflow to load a new dataset from OneLake to the warehouse.

You need to add a Power Query step to identify the maximum values for the numeric columns.

Which function should you include in the step?

A. Table.MaxN

B. Table.Max

C. Table.Range

D. Table.Profile

Answer: D
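The requirement in this question, one maximum per numeric column, is a per-column summary rather than a search for a single largest row. The sketch below illustrates that idea in plain Python, not Power Query M; `column_maxima` and the sample rows are hypothetical and exist only for this example.

```python
from numbers import Number


def column_maxima(rows):
    """Return {column: max} for columns whose non-null values are all numeric."""
    maxima = {}
    columns = rows[0].keys() if rows else []
    for col in columns:
        values = [r[col] for r in rows if r[col] is not None]
        # bool subclasses int in Python, so exclude it explicitly.
        if values and all(
            isinstance(v, Number) and not isinstance(v, bool) for v in values
        ):
            maxima[col] = max(values)
    return maxima


sample = [
    {"region": "North", "units": 12, "price": 9.5},
    {"region": "South", "units": 30, "price": None},
    {"region": "East", "units": 7, "price": 11.25},
]
result = column_maxima(sample)
# result == {"units": 30, "price": 11.25}; the text column "region" is skipped
```

A profiling step in a dataflow produces this kind of per-column summary, usually alongside other statistics such as minimum, average, and null count.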

QUESTION 6

You have a Fabric tenant that contains a machine learning model registered in a Fabric workspace.

You need to use the model to generate predictions by using the PREDICT function in a Fabric notebook.

Which two languages can you use to perform model scoring? Each correct answer presents a complete solution.

NOTE: Each correct answer is worth one point.

A. T-SQL

B. DAX

C. Spark SQL

D. PySpark

Answer: C, D

QUESTION 7

You are analyzing the data in a Fabric notebook.

You have a Spark DataFrame assigned to a variable named df.

You need to use the Chart view in the notebook to explore the data manually.

Which function should you run to make the data available in the Chart view?

A. displayHTML

B. show

C. write

D. display

Answer: D

I Got My Success Due to the Actualkey DP-600 Bundle Pack

Thanks to the Actualkey experts, I passed the DP-600 exam without any worries at all. These exam materials gave me a reason to relax.

Budi Saptarmat

Yahoo! Got Successfully Through the DP-600 Exam

Passing the exam is not easy. Thanks to Actualkey.com for providing actual DP-600 Implementing Analytics Solutions Using Microsoft Fabric exam training. The included offline and Android simulators helped me succeed.

Melinda

DP-600 Exam Best Preparation

I had been preparing for the DP-600 Implementing Analytics Solutions Using Microsoft Fabric exam and was not sure I would be able to pass, because I am not a good student. However, Actualkey.com provided the best and simplest exam training PDFs, and I passed. I now recommend it to everyone.

Antonio Moreno

Actualkey.com DP-600 Offline Simulator Is Best

I chose Actualkey.com to prepare for the DP-600 Implementing Analytics Solutions Using Microsoft Fabric exam because it gave me a short and easy way to study.

Liliane Meichner

Actualkey.com DP-600 Exam PDFs

I passed within a week using the DP-600 exam PDFs. That's amazing.

James Wilson



Copyright © 2009 - 2025 Actualkey. All rights reserved.